Imagine a robotic arm that not only slices a tomato into uniform pieces but does so across multiple successive cuts, without ever needing manual alignment or specialized labels. Achieving this level of precision and adaptability with deformable materials remains an open challenge in robotics. Recent work introduces TopoCut, a unified framework that combines high-fidelity simulation, novel evaluation metrics, and learning algorithms to tackle multi-step cutting tasks, with a focus on robustness and on generalizing across object shapes, scales, and poses.

What’s New in Robotic Cutting?

Traditional approaches either rely on fixed cutting trajectories or require explicit geometric alignment to assess success, which makes them brittle in real-world scenarios. Most methods also cannot perceive the dense topological changes that sequential cuts produce, as when a single piece splits into several fragments. TopoCut addresses these limitations with a spectral reward that is invariant to object pose and a perception module that infers hidden topological structure, all within a single, extensible benchmark.
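To make the alignment problem concrete, consider the symmetric Chamfer distance, a widely used point-cloud similarity measure. The source does not say which metrics earlier methods used. Chamfer distance appears here only as an illustrative example of an alignment-dependent score. The box-shaped point cloud and the `chamfer_distance` and `rotation_z` helpers are hypothetical. The NumPy sketch below shows that merely rotating an unchanged shape yields a large distance:

```python
import numpy as np


def chamfer_distance(a: np.ndarray, b: np.ndarray) -> float:
    """Symmetric Chamfer distance between point clouds a (N, 3) and b (M, 3)."""
    # Pairwise squared distances, shape (N, M).
    d2 = np.sum((a[:, None, :] - b[None, :, :]) ** 2, axis=-1)
    # Mean squared distance to the nearest neighbour, in both directions.
    return float(d2.min(axis=1).mean() + d2.min(axis=0).mean())


def rotation_z(theta: float) -> np.ndarray:
    """Rotation matrix about the z-axis by theta radians."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


rng = np.random.default_rng(0)
# Elongated box of points standing in for, e.g., a carrot lying along x.
shape = rng.uniform([-2.0, -0.5, -0.5], [2.0, 0.5, 0.5], size=(500, 3))
rotated = shape @ rotation_z(np.pi / 2).T  # same shape, turned 90 degrees

print(chamfer_distance(shape, shape))    # 0.0 for identical, aligned clouds
print(chamfer_distance(shape, rotated))  # large, although the shape is unchanged
```

Undoing the rotation first (`rotated @ rotation_z(np.pi / 2)`) brings the distance back to essentially zero. That alignment step is exactly what a pose-invariant reward avoids.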

Introducing TopoCut: A Unified Framework

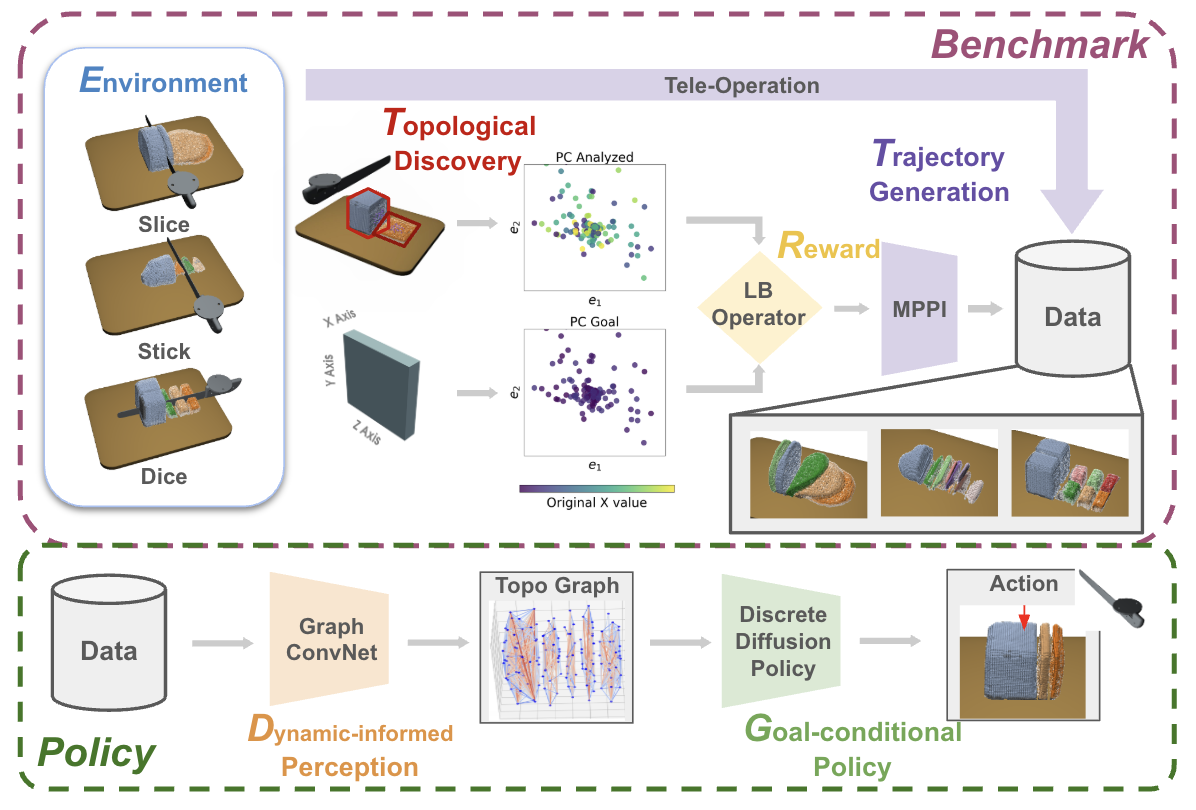

TopoCut is built upon three synergistic components:

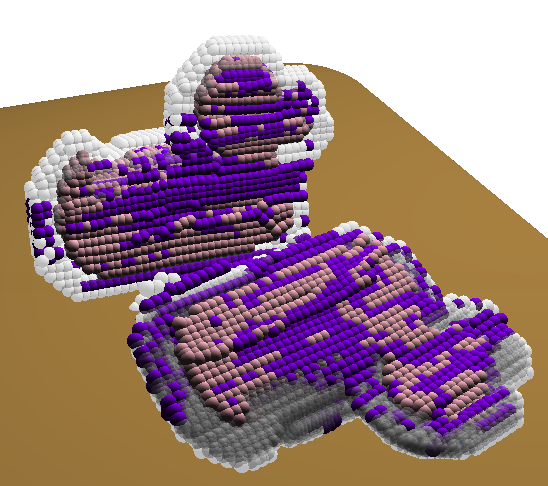

- High-Fidelity Simulation: A particle-based elastoplastic solver built on the Moving Least Squares Material Point Method (MLS-MPM), with compliant von Mises plasticity models and a damage-driven topology discovery mechanism.

- Spectral Reward: A metric based on eigenanalysis of the Laplace–Beltrami operator, which depends only on a shape's intrinsic geometry, so it evaluates shape similarity without requiring explicit alignment.

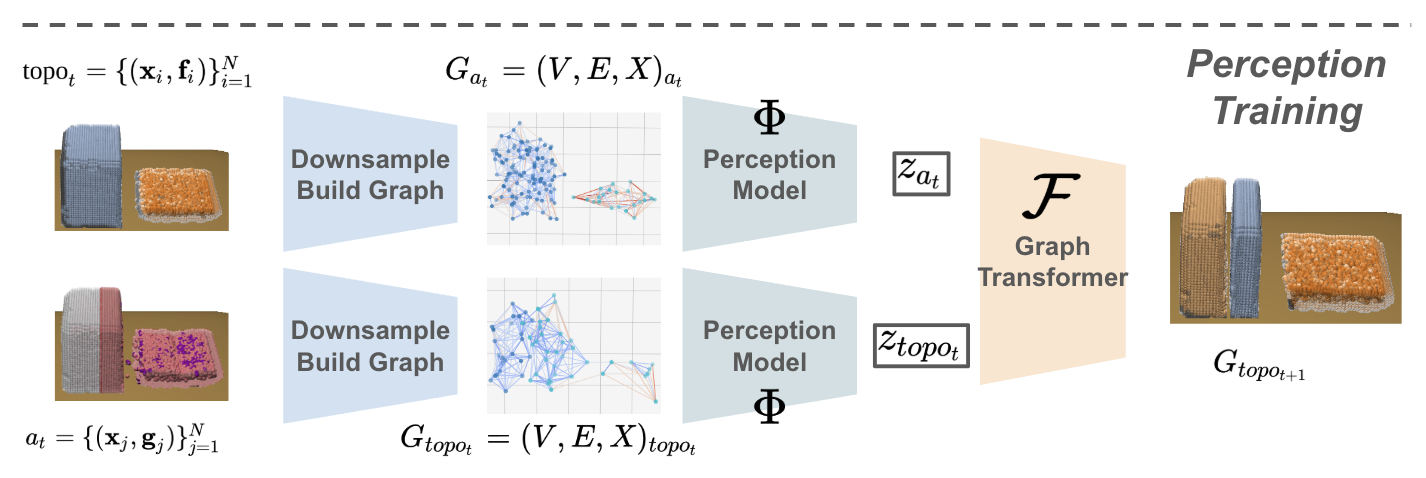

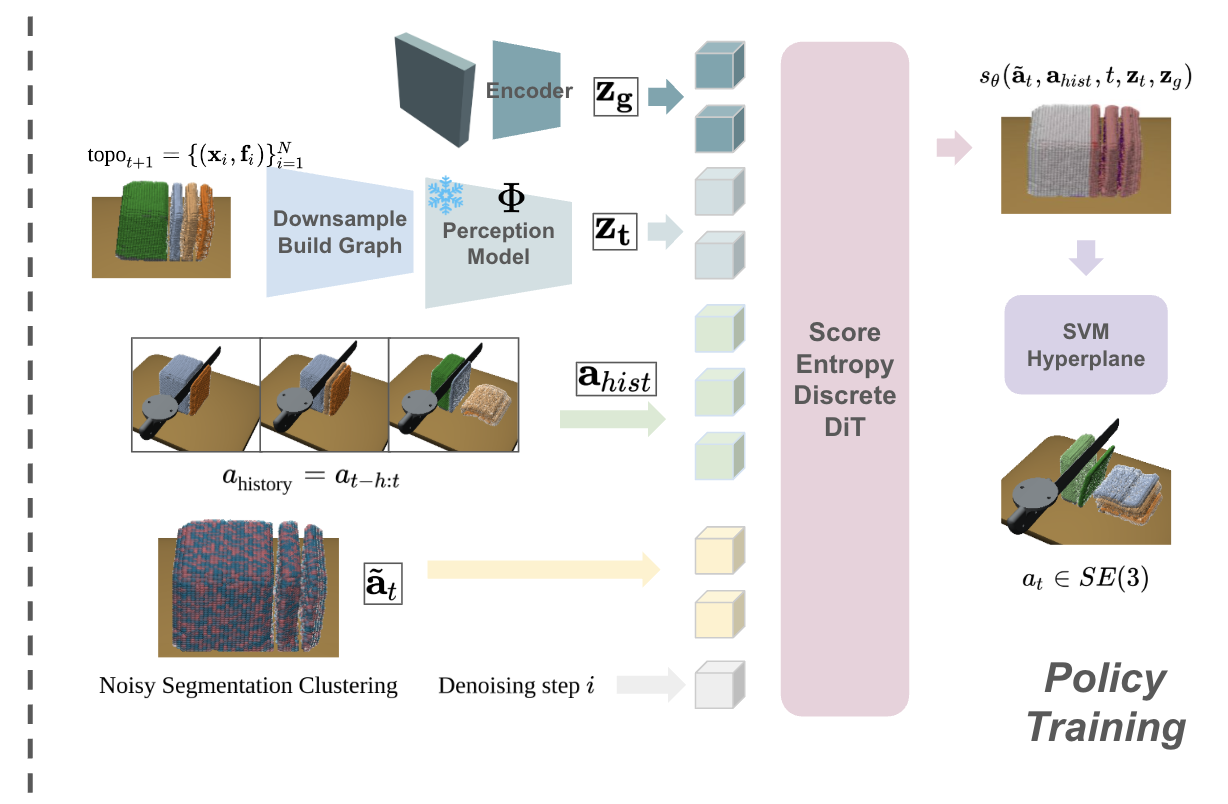

- PDDP Policy Learning: A conditional discrete diffusion policy, powered by dynamics-informed perception embeddings, for generating multi-step cutting actions.
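The source describes PDDP only at a high level. What follows is a hedged sketch of one common discrete diffusion variant, absorbing-state (masked) diffusion with a linear schedule, applied to a cut action discretized into tokens. The bin count and the action layout are hypothetical. So is the `denoiser` stub, which stands in for the learned, embedding-conditioned network. Nothing here reflects PDDP's actual architecture or noise process.

```python
import numpy as np

VOCAB = 32         # hypothetical: number of bins per action dimension
MASK = VOCAB       # extra "absorbing" token id that plays the role of noise
ACTION_DIMS = 6    # hypothetical: e.g. a binned cut-plane point and normal


def forward_corrupt(x0: np.ndarray, t: int, T: int, rng: np.random.Generator) -> np.ndarray:
    """q(x_t | x_0): replace each token independently by MASK with probability t / T."""
    masked = rng.random(x0.shape) < t / T
    return np.where(masked, MASK, x0)


def denoiser(xt: np.ndarray, obs_emb: np.ndarray, goal_emb: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for the learned network; returns (ACTION_DIMS, VOCAB) logits.

    A trained model would condition on xt and the perception embeddings; this stub
    ignores xt and simply favours one token derived from the embeddings.
    """
    preferred = (obs_emb + goal_emb).astype(int) % VOCAB
    logits = np.full((ACTION_DIMS, VOCAB), -5.0)
    logits[np.arange(ACTION_DIMS), preferred] = 5.0
    return logits


def sample_action(obs_emb, goal_emb, T=10, rng=None):
    """Reverse process: start fully masked and unmask tokens over T steps."""
    if rng is None:
        rng = np.random.default_rng()
    xt = np.full(ACTION_DIMS, MASK)
    for t in range(T, 0, -1):
        logits = denoiser(xt, obs_emb, goal_emb)
        probs = np.exp(logits - logits.max(axis=1, keepdims=True))  # stable softmax
        probs /= probs.sum(axis=1, keepdims=True)
        proposal = np.array([rng.choice(VOCAB, p=p) for p in probs])
        # Under the linear schedule above, a token still masked at step t is
        # revealed with probability 1 / t, so all tokens are revealed at t = 1.
        reveal = (xt == MASK) & (rng.random(ACTION_DIMS) < 1.0 / t)
        xt = np.where(reveal, proposal, xt)
    return xt


rng = np.random.default_rng(0)
obs_emb = np.arange(ACTION_DIMS, dtype=float)   # placeholder embeddings
goal_emb = np.full(ACTION_DIMS, 3.0)
action_tokens = sample_action(obs_emb, goal_emb, rng=rng)
print(action_tokens)  # six bin indices in [0, VOCAB)
```

One appeal of discrete tokens is that each action dimension gets a full categorical distribution. That lets it represent several distinct valid cuts instead of averaging them. The source does not state PDDP's own rationale.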

How TopoCut Works

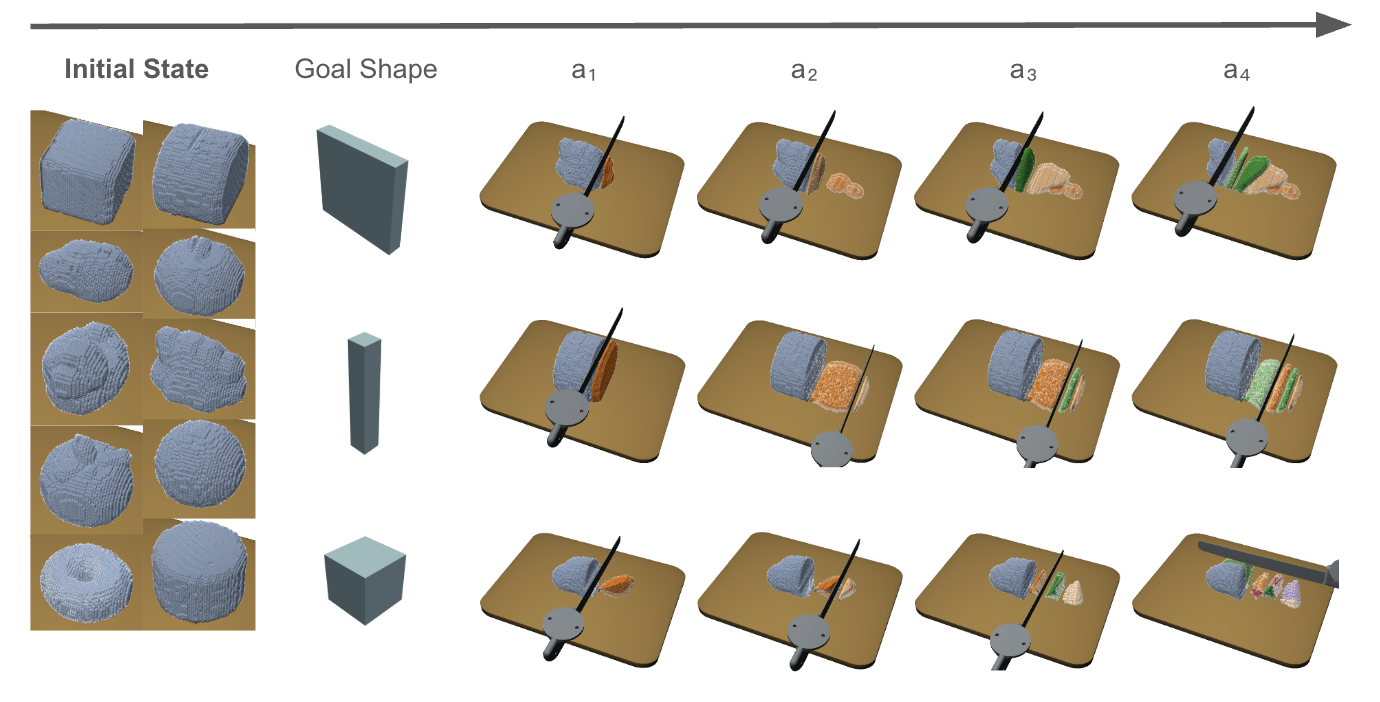

First, expert data is collected in the simulator via teleoperation and via trajectories guided by Model Predictive Path Integral (MPPI) control. The topology discovery module tracks particle damage and reconstructs the surfaces of the resulting fragments. Next, the spectral reward computes a pose-agnostic similarity score to guide planning. A perception encoder then learns, from depth observations, to predict how the object's topology evolves, producing particle-wise embeddings. Finally, PDDP uses these embeddings in a discrete diffusion process, outputting cut predictions conditioned on both the current and goal shapes.
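The topology discovery step can be pictured as a graph problem. Bond nearby intact particles, treat heavily damaged particles as broken, and read off each connected component of the bond graph as a fragment. The sketch below makes several assumptions the source does not specify: a per-particle damage value in [0, 1], a fixed bonding radius, and a damage threshold. The parameters `radius` and `damage_threshold` are hypothetical. The sketch uses SciPy's `cKDTree.query_pairs` and `scipy.sparse.csgraph.connected_components`, and it stops before the surface-reconstruction stage.

```python
import numpy as np
from scipy.sparse import coo_matrix
from scipy.sparse.csgraph import connected_components
from scipy.spatial import cKDTree


def discover_fragments(positions, damage, radius=0.05, damage_threshold=0.5):
    """Label particles by fragment.

    positions: (N, 3) particle positions; damage: (N,) values in [0, 1].
    Particles whose damage reaches `damage_threshold` are treated as broken and
    labelled -1. Remaining particles are bonded when within `radius` of each other,
    and each connected component of that bond graph is one fragment.
    """
    intact = np.flatnonzero(damage < damage_threshold)
    pairs = cKDTree(positions[intact]).query_pairs(r=radius, output_type="ndarray")
    pairs = np.asarray(pairs).reshape(-1, 2)  # guard against an empty result
    n = intact.size
    bonds = coo_matrix((np.ones(len(pairs)), (pairs[:, 0], pairs[:, 1])), shape=(n, n))
    n_fragments, sub_labels = connected_components(bonds.tocsr(), directed=False)
    labels = np.full(len(positions), -1)
    labels[intact] = sub_labels
    return n_fragments, labels


# Toy example: a 1-D bar of 50 particles with a damaged band in the middle (the cut).
xs = np.arange(0.0, 1.0, 0.02)
positions = np.stack([xs, np.zeros_like(xs), np.zeros_like(xs)], axis=1)
damage = np.where(np.abs(xs - 0.5) < 0.03, 1.0, 0.0)
n_fragments, labels = discover_fragments(positions, damage)
print(n_fragments)  # 2: the bar has been split into two pieces
```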

Why TopoCut Matters

By integrating simulation, evaluation, perception, and policy learning in a single framework, TopoCut offers several advantages for deformable object manipulation:

- No Manual Alignment: The spectral reward removes the need to align the current and goal shapes before comparing them.

- Topology-Aware Learning: Perception embeddings capture dense structural changes for robust policy conditioning.

- Scalable & Generalizable: Demonstrated success across ten object geometries, varying scales, poses, and cut goals.
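The alignment-free property rests on a simple fact. Laplace–Beltrami eigenvalues depend only on intrinsic geometry (distances measured within the shape), so rotating or translating an object leaves them unchanged. The NumPy sketch below illustrates this with a swapped-in discretization: a Gaussian-weighted k-nearest-neighbour graph Laplacian on a point cloud. It pairs that with a hypothetical reward equal to the negative L2 distance between the smallest eigenvalues. The toy clouds fill a volume rather than sampling a surface, and `k`, `sigma`, and `n_eigs` are arbitrary choices. The sketch therefore demonstrates the invariance argument rather than reproducing TopoCut's metric.

```python
import numpy as np


def laplacian_spectrum(points, k=10, sigma=0.3, n_eigs=20):
    """Smallest eigenvalues of a Gaussian-weighted kNN graph Laplacian on an (N, 3) cloud."""
    d2 = np.sum((points[:, None, :] - points[None, :, :]) ** 2, axis=-1)
    n = len(points)
    # Column 0 of each sorted row is the point itself (distance 0), so skip it.
    nn = np.argsort(d2, axis=1)[:, 1 : k + 1]
    rows = np.repeat(np.arange(n), k)
    cols = nn.ravel()
    w = np.zeros((n, n))
    w[rows, cols] = np.exp(-d2[rows, cols] / (2.0 * sigma**2))
    w = np.maximum(w, w.T)              # symmetrise: keep an edge if either end chose it
    lap = np.diag(w.sum(axis=1)) - w    # combinatorial Laplacian L = D - W
    return np.linalg.eigvalsh(lap)[:n_eigs]  # eigvalsh returns eigenvalues in ascending order


def spectral_reward(current, goal, **kwargs):
    """Negative L2 distance between spectra; 0 means the spectra match."""
    diff = laplacian_spectrum(current, **kwargs) - laplacian_spectrum(goal, **kwargs)
    return -float(np.linalg.norm(diff))


rng = np.random.default_rng(1)
shape = rng.uniform([-2.0, -0.5, -0.5], [2.0, 0.5, 0.5], size=(300, 3))
c, s = np.cos(0.7), np.sin(0.7)
rot = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
moved = shape @ rot.T + np.array([5.0, -3.0, 1.0])  # same shape, new pose
stretched = shape * np.array([1.5, 1.0, 1.0])       # genuinely different shape

print(spectral_reward(shape, moved))      # ~0, up to floating-point error
print(spectral_reward(shape, stretched))  # clearly negative
```

For surfaces, a mesh-based discretization such as the cotangent Laplacian is the more usual choice. It shares the same invariance because it is built only from triangle angles and areas.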

Real-World Implications

The TopoCut framework paves the way for advanced automation in industries such as food processing, where precise multi-pass slicing is vital; surgical robotics, which requires careful sequential incisions; and manufacturing settings that demand accurate material segmentation. In each case it could operate with far less human supervision than existing approaches require.

Looking Forward

TopoCut not only provides a comprehensive benchmark but also highlights future directions: accelerating mesh reconstruction so that it can support online reinforcement learning, and extending tasks to more general objectives such as sculpting or partial shape matching. These advances could further close the sim-to-real gap and unlock new capabilities for intelligent robotic manipulation.